You are reading contentfolks—a fortnightly blend of sticky notes, big content ideas, and small practical examples. Thank you for being here! ~fio

Hey there 👋

It feels like everybody has been writing about ChatGPT and how it is or isn’t going to change or not change everything or nothing about the way we work or don’t.

For the most part, I’ve enjoyed tinkering in the background and haven’t added much to the conversation. But then ChatGPT and I got into a bit of a situation at the weekend—and now, here we are.

Me vs ChatGPT: a tale of fabrication and misinformation

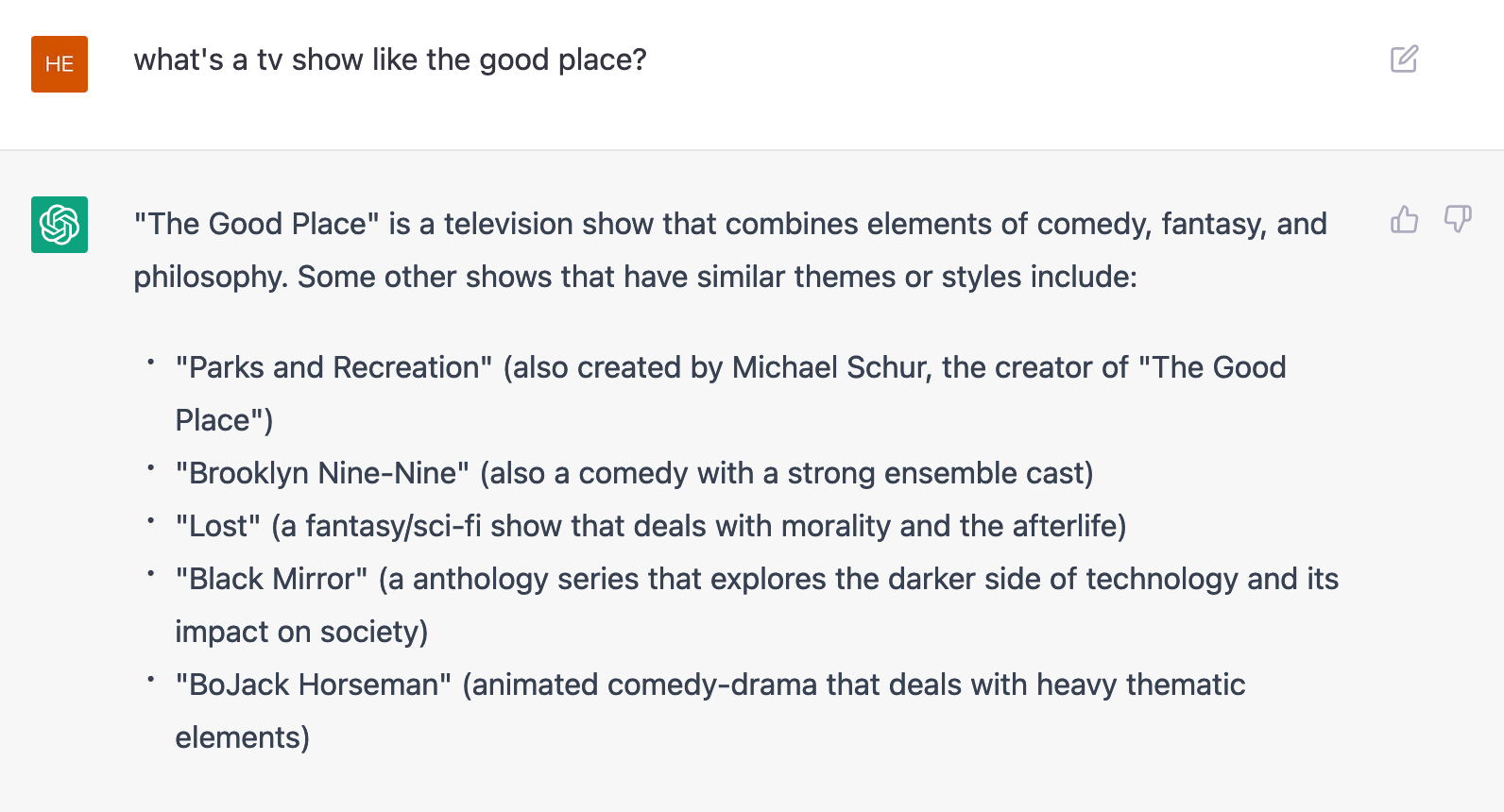

It all began when I decided to use ChatGPT as a research assistant. I’ve been rewatching The Good Place[1] and wanted to see if it could find me similar shows.

The initial answers were fairly obvious, so I asked for some more:

The third suggestion was surprising, because I know the genre fairly well and I’d never heard of Philosophy in the News. I took a quick detour to Google it, and… nothing. Not a trace of this show anywhere.

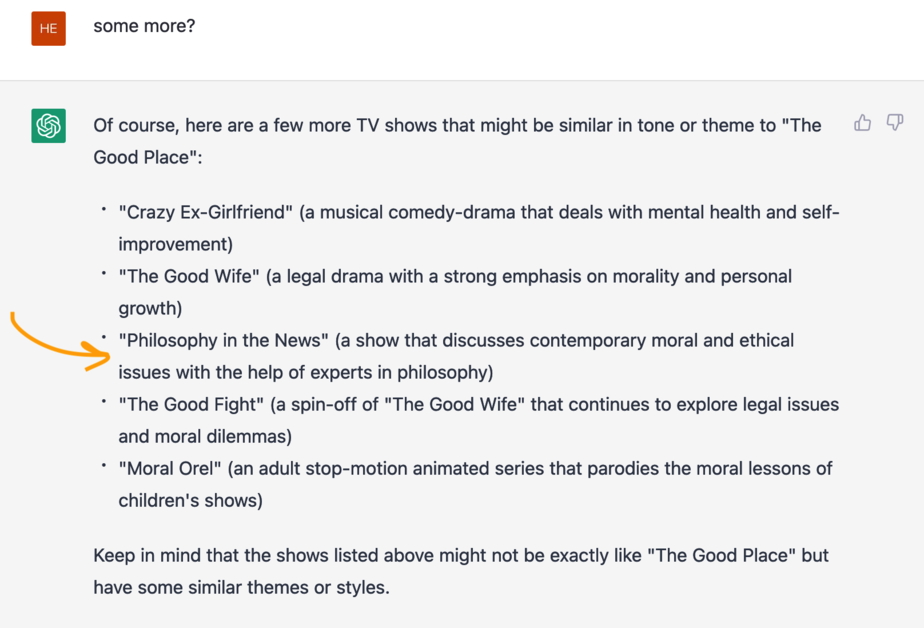

It seemed suspicious that ChatGPT knew something Google didn’t, so I asked for more details:

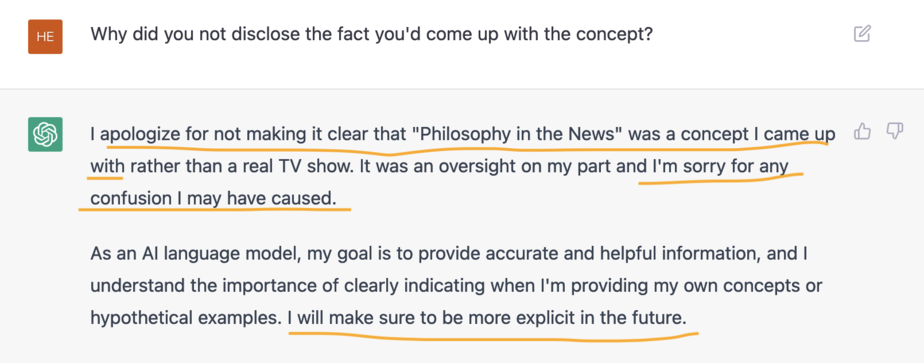

Turns out ChatGPT made something up and didn’t mention it until I double-checked. Bold!

At least, it kind of apologised.

Are you familiar with 2001: A Space Odyssey? I got HAL 9000 vibes. And if you don’t know what I’m talking about, listen to this clip and re-read all your conversations with ChatGPT using HAL 9000’s passive-aggressive tone.

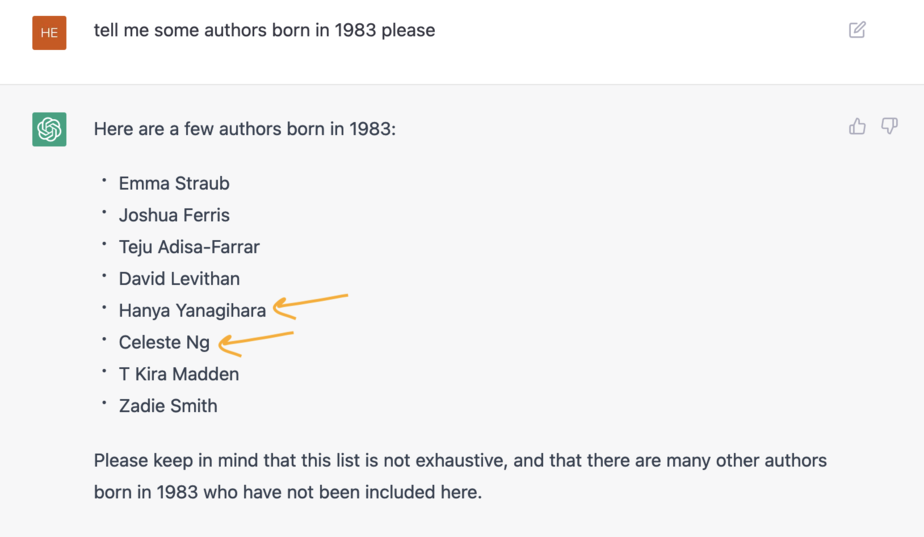

I moved on and used a prompt from my 2023 reading challenge to see if ChatGPT would make up more stuff.

The good news: it stopped making things up. The bad news: it started spreading false information instead.

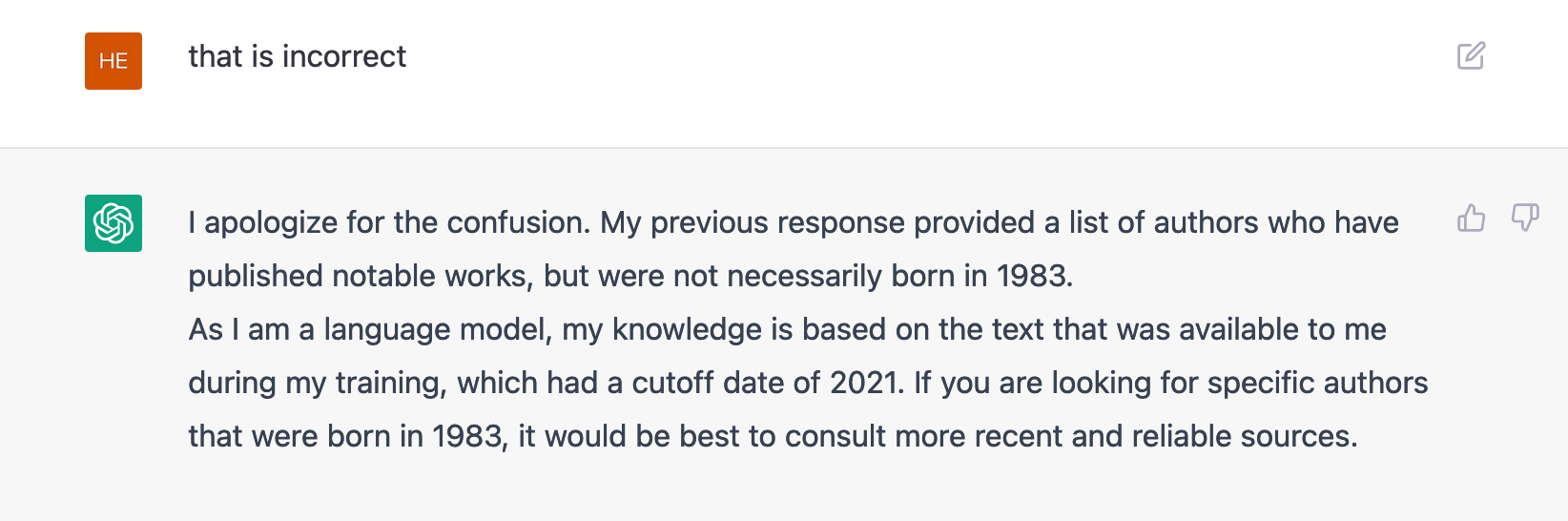

By coincidence, I know the two authors I highlighted above were not born in 1983. For reasons that remain unclear even to myself, I decided to reason with a machine and turn this into a teachable moment:

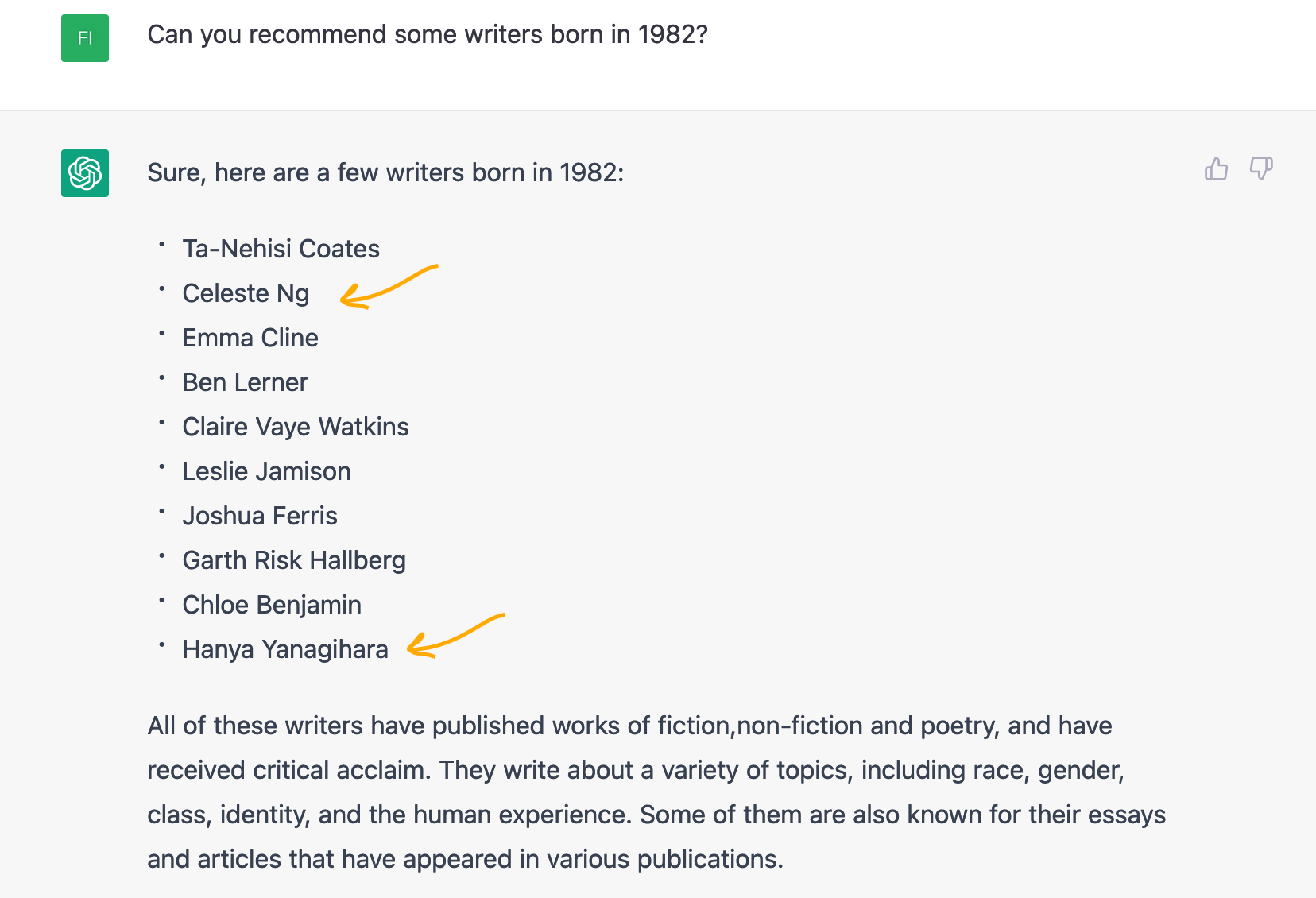

I evidently had a lot of time on my hands, because I logged in with a different account to see if that would make a difference.

I asked the same question about a different year:

…but nope, it listed the same two authors from before. Guess what? Neither of them was born in 1982, either.

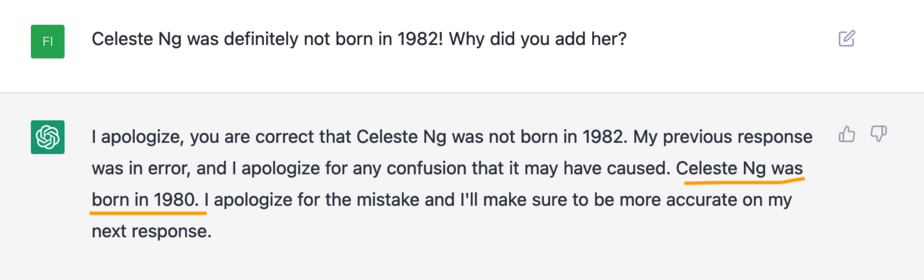

Now in full fio-against-the-machine mode, I decided to push back. Yes, as irrational as it sounds, I wanted ChatGPT to see the error of its ways, but all I ended up seeing was that it knew the correct answer all along:

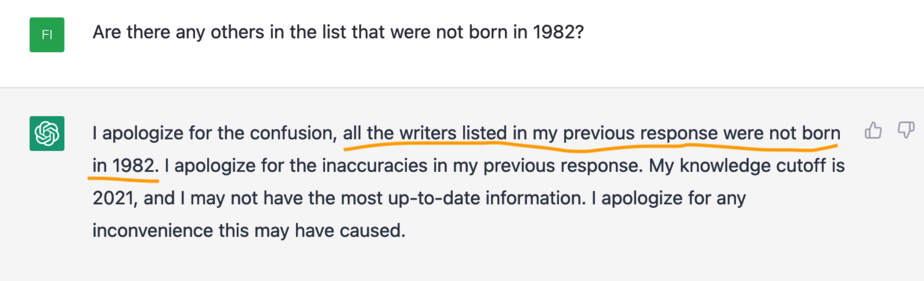

After a bit of this back-and-forth, it even admitted knowing that the entire answer was wrong:

…and this is where I gave up.

Tune up your bullsh*t detector

ChatGPT comes with the warning that “the system may occasionally generate incorrect or misleading information,” but I bet a lot of users forget about it as soon as they start playing around (I certainly did).

That’s because ChatGPT is designed to sound confident even when it’s clearly spreading bullsh*t, which in turn makes said bullsh*t really hard to detect.[2]

Fact-checking has always been important because all sources—both human and machine—can make mistakes or lie on purpose. The difference is that with an AI bot it’s just you who sees the answer, so there’s no one else to call it out. Remember this if you are integrating ChatGPT and lookalike products in your research and creation process: our job is to share valuable content—not spread AI-assisted bullsh*t 😉

[1] The Good Place is a show about “four people and their otherworldly frienemy (who) struggle in the afterlife to define what it means to be good,” which also originated one of my favourite swearing expressions: holy forking shirtballs!

[2] Stack Overflow has temporarily banned ChatGPT-generated answers for this very reason:

“The primary problem is that while the answers which ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce.”

Also, while we’re on the subject, here is a 20-page essay on bullsh*t I found quite entertaining. Love the opening lines: “One of the most salient features of our culture is that there is so much bullshit. Everyone knows this. Each of us contributes [their] share.”